Responsible Artificial Intelligence (RAI) is a framework for ensuring that artificial intelligence is developed and deployed ethically and in a trustworthy way, while upholding human rights and societal values. As businesses rely on AI more heavily in their operations, reducing risk and improving transparency become increasingly important.

According to IDC’s (International Data Corporation) 2024 AI opportunity study, the use of generative AI rose from 55% in 2023 to 75% in 2024. That is a rise of 20 percentage points, or roughly 36% in relative terms.

Responsible development of AI helps organisations comply with regulations, minimise bias, and build stakeholder trust while contributing to sustainable growth.

In this detailed guide, we’ll explore what responsible AI is, how it works, its benefits and challenges, key principles and the future of responsible AI for businesses.

What Is Responsible AI?

Responsible AI is the practice of creating, evaluating, and implementing AI systems in a safe, trustworthy, and ethical manner. This means companies follow clear guidelines to meet legal requirements, cut bias in data and decision-making and build trust among stakeholders.

Responsible AI represents a dynamic combination of essential qualities. It is not only reliable, but it also incorporates a strong awareness of power dynamics and ethical considerations, all while aiming to minimise risks. This approach establishes principles that align the use of AI with business values and regulatory standards, ensuring that technology serves everyone fairly and responsibly.

A recent survey by IDC shows that 91% of organisations use AI technology in 2024. They expect that AI will improve customer experience, business strength, sustainability, and efficiency by more than 24%. Organisations that use RAI solutions also see benefits like better data privacy, improved customer experience, smarter business decisions, and a stronger brand reputation and trust.

Why Is Responsible AI Important?

Adopting Responsible AI helps protect a company’s reputation and supports compliance with legal and social standards. Businesses practising responsible AI can help mitigate the risk of bias and avoid potential fines or public backlash.

Some of the key benefits of adopting responsible AI include:

1. Accelerate the integration of emerging technologies

Adopting ethical and responsible AI can help businesses integrate new technologies more quickly. When AI systems are designed with fairness, accountability, and transparency, organisations can trust them to support critical operations. This reduces the risk of errors, bias, or security breaches, making it easier to introduce AI-driven solutions without resistance from employees or regulators.

2. Strengthen regulatory adherence

Companies must comply with legal and industry standards, and AI systems are no exception. Responsible AI helps businesses avoid fines, legal challenges, and reputational damage by aligning with data protection laws and governance requirements. By embedding ethical considerations into AI from the outset, organisations reduce the risk of regulatory scrutiny and costly corrective actions later.

3. Establish confidence in AI systems

Trust is a critical factor in the adoption of AI-driven services and solutions. When employees, customers, and stakeholders see that AI is used responsibly, they are more likely to engage with it. Transparent AI models that explain decisions, rather than operate as black boxes, build confidence and encourage wider adoption across the business.

4. Facilitate large-scale AI deployment

For AI to scale effectively across industries, it must be reliable and consistent. Ethical AI ensures that automated processes deliver stable, predictable results. This is essential for businesses looking to apply AI at an enterprise level, where mistakes can be costly. By prioritising responsible AI, organisations can move from small-scale pilots to full-scale implementation with confidence.

5. Prevent harm

AI is powerful, but in the wrong hands, it can be dangerous. Deepfake technology can spread fake news, AI-powered weapons can be misused, and automated decisions can have life-changing consequences. Responsible AI development ensures that AI is used for good—helping people, not harming them.

6. Keep humans in control

AI should assist humans, not replace them entirely. Human oversight is essential in areas like healthcare, finance, and law. Responsible AI ensures that people remain in control, making the final decisions and using AI as a tool rather than letting it take over completely.

How Responsible AI Works in the Real World

Here are some of the ways that responsible AI works:

Ethical AI Development

Companies train AI with diverse data to prevent bias. For example, if a facial recognition system is trained on images of only one ethnicity, it may struggle to recognise people of other ethnicities.

AI Regulations and Guidelines

Governments and tech companies create AI laws and ethics boards to set rules. The EU’s AI Act and Google’s AI ethics principles are examples of efforts to regulate AI use.

Human Oversight

AI shouldn’t work on its own without human supervision. AI in hiring, finance, healthcare, or law enforcement should always have human experts double-checking decisions.

Continuous Testing & Improvement

AI is never perfect. Engineers constantly test AI for bias, errors, and security risks, making updates to ensure it stays responsible over time.

Principles of Responsible AI

To ensure AI is used ethically and effectively, organisations must follow some key principles that keep it fair, transparent, and safe. Here are five essential principles of responsible AI:

1. Fairness and Bias Mitigation

AI should work for everyone, not just a select few. However, because AI learns from data, it can pick up biases from the real world. If not handled properly, this can lead to unfair outcomes, such as discrimination in hiring or lending decisions.

To prevent this, developers must carefully check and balance data sets, test for AI bias, and use techniques like diverse data sampling and fairness algorithms. The goal is to create AI that treats all individuals equally, regardless of factors like gender, race, or background.
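As a rough illustration of what "testing for bias" can look like in practice, the sketch below compares how often each group receives a favourable decision and reports the ratio between the lowest and highest rates. The function names, the sample data and the 0.8 review threshold (a commonly cited rule of thumb) are assumptions made for this example, not a prescribed method or a legal test.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions per group.

    `records` is an iterable of (group, decision) pairs, where decision is
    True for a favourable outcome (e.g. shortlisted, loan approved).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Hypothetical decisions from a hiring model: (group, was_shortlisted)
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold only

print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own, but it is a useful trigger for a closer human review of the data and the model.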

2. Transparency and Explainability

AI shouldn’t feel like a mysterious "black box." Users and decision-makers need to understand how AI systems arrive at their conclusions. This means making AI models more interpretable by providing clear explanations of their logic and outputs.

If an AI denies someone a loan or suggests a medical treatment, the reasoning should be easy to follow. Transparent AI builds trust and ensures people can challenge or correct decisions when necessary.
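To make the idea of an explainable decision concrete, here is a minimal sketch that assumes a very simple linear scoring model, where each feature's contribution is its weight multiplied by its value. The feature names, weights and applicant values are invented for illustration. More complex models need dedicated explainability tooling, which this example does not attempt to reproduce.

```python
def explain_linear_score(weights, features, bias=0.0, top_n=3):
    """Score an applicant and list the features that moved the score most.

    Assumes a linear model: score = bias + sum(weight * value).
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # Rank by absolute size so large negative factors are not hidden
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_n]


# Hypothetical loan-scoring weights and one applicant's data
weights = {"missed_payments": -1.5, "income_to_debt": 2.0, "years_at_address": 0.1}
applicant = {"missed_payments": 3, "income_to_debt": 0.5, "years_at_address": 2}

score, reasons = explain_linear_score(weights, applicant)
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
```

Listing the largest contributions first gives a reviewer or customer a short, checkable reason for the outcome, which they can then challenge if the underlying data is wrong.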

3. Accountability and Governance

If AI makes a mistake, who takes responsibility? AI systems don’t exist in isolation—there must be human oversight to ensure they are used correctly. Strong governance frameworks are essential to define clear roles and responsibilities, set ethical guidelines, and establish protocols for handling AI-related risks.

Organisations should have dedicated teams to monitor AI compliance and ensure that its use aligns with legal and ethical standards.

4. Data Privacy and Security

AI relies on vast amounts of data, but with that comes a duty to protect it. Users must feel confident that their personal information is safe and handled responsibly.

Companies should follow strict data protection laws, use encryption, anonymise sensitive data, and limit access to only those who truly need it. Without strong privacy and security measures, AI could become a tool for misuse, leading to data breaches and loss of public trust.
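One small piece of this can be sketched in code: dropping fields a model does not need and replacing a direct identifier with a keyed hash. Strictly speaking this is pseudonymisation rather than full anonymisation, and the field names, the `strip_record` helper and the placeholder key are all assumptions for the example. In a real system the key would come from a secrets manager.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_record(record: dict, secret_key: bytes, allowed_fields: set) -> dict:
    """Keep only the fields the model needs and pseudonymise the customer ID."""
    cleaned = {k: v for k, v in record.items() if k in allowed_fields}
    cleaned["subject_ref"] = pseudonymise(record["customer_id"], secret_key)
    return cleaned


key = b"placeholder-key-load-from-a-secrets-manager"  # hypothetical value
raw = {
    "customer_id": "C-1001",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age_band": "30-39",
    "region": "North",
}

safe = strip_record(raw, key, allowed_fields={"age_band", "region"})
print(sorted(safe))
```

The same identifier always maps to the same reference, so records can still be linked for analysis without exposing names or email addresses to the AI pipeline.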

5. Continuous Monitoring and Improvement

AI is not a "set it and forget it" technology. It requires ongoing monitoring to ensure it performs as expected over time. Changes in data, new threats, or evolving ethical concerns mean AI solutions must be regularly updated and improved.

Organisations should have systems in place to track AI behaviour, flag issues, and make adjustments when needed. Continuous learning and refinement help AI stay relevant, fair, and effective.

“Responsible AI needs to be embedded into every stage of the AI lifecycle, from data collection to ongoing monitoring. That means conducting bias audits early, using Explainability tools to ensure transparency, and setting clear governance policies for accountability. Regular performance reviews, retraining cycles, and user feedback loops keep AI models accurate and aligned with ethical and regulatory standards.” Sean Houghton, Commercial & Operations Director of Aztech IT Solutions

The Business Case for Ethical AI Implementation

AI-driven insights can lead to breakthroughs in decision-making, customer experience, and operational efficiency. However, organisations must balance innovation with responsibility, ensuring that AI-driven automation does not introduce bias, security gaps, or compliance risks.

Businesses can unlock AI’s full potential while mitigating unintended harm by embedding Responsible AI principles—fairness, transparency, accountability, security, and continuous monitoring.

Here is a hypothetical use case scenario and example of Responsible AI in action.

Scenario

A mid-sized private hospital, Riverview Healthcare, is struggling to manage rising patient readmission rates. It wants to deploy an AI-powered predictive analytics system to identify high-risk patients and deliver proactive care.

However, it faces strict compliance regulations (HIPAA, GDPR for international patients), concerns about algorithmic bias, and potential reputational damage if the AI inaccurately flags certain patient groups.

Objective

- Reduce 30-day Readmissions: Use an AI model to predict which patients are most likely to be readmitted within 30 days, aiming for a 10% reduction in readmission rates.

- Maintain Ethical Standards: Avoid discriminatory outcomes across demographics, ensure patient data privacy, and keep the system transparent to staff and regulators.

Implementation

- Data Governance & Bias Audits
  - The hospital forms a cross-functional AI ethics committee (IT, compliance, medical leadership).
  - They perform a Responsible AI Readiness Assessment, screening existing patient data for imbalances (e.g., underrepresentation of certain ethnic groups).

- Transparent Model Development
  - IT teams work with an explainable AI (XAI) framework, enabling clinical staff to see key factors influencing predictions (e.g., past hospital visits, medication adherence).
  - Ongoing monitoring flags potential bias, with monthly reviews to check if one demographic group faces higher false-positive rates.

- Privacy and Security Measures
  - Data encryption and multi-factor authentication protect sensitive patient information.
  - A robust governance policy clarifies who can access AI outputs, how data is stored, and what to do if any ethical issues arise.
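The monthly false-positive review described above could be sketched roughly as follows. A false positive here means a patient flagged as high risk who was not readmitted. The group labels, sample counts and the 5-percentage-point tolerance are hypothetical values chosen for the example, not figures from the scenario.

```python
def false_positive_rates(rows):
    """Return the false-positive rate per group.

    `rows` is an iterable of (group, predicted_high_risk, was_readmitted).
    Only patients who were NOT readmitted count towards the rate.
    """
    false_positives = {}
    negatives = {}
    for group, predicted, actual in rows:
        if not actual:
            negatives[group] = negatives.get(group, 0) + 1
            if predicted:
                false_positives[group] = false_positives.get(group, 0) + 1
    return {g: false_positives.get(g, 0) / n for g, n in negatives.items()}


def flag_gap(rates, max_gap=0.05):
    """Flag the review if the gap between groups exceeds the tolerance."""
    if not rates:
        return False, 0.0
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, gap


# Hypothetical month of predictions for two patient groups
rows = (
    [("Group X", True, False)] * 10 + [("Group X", False, False)] * 90
    + [("Group Y", True, False)] * 10 + [("Group Y", False, False)] * 40
    + [("Group X", True, True)] * 20 + [("Group Y", True, True)] * 15
)

rates = false_positive_rates(rows)
flagged, gap = flag_gap(rates)
print(rates, flagged, round(gap, 3))
```

In this made-up data, Group Y is wrongly flagged twice as often as Group X, which is the kind of result the ethics committee would want escalated for investigation.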

Outcomes

- Reduced Readmission Rate by 12%
  - Within six months, Riverview Healthcare surpassed its original target, lowering readmissions and associated costs.

- Heightened Patient Trust
  - Transparent disclosures about how AI identifies at-risk patients helped ease concerns, leading to a 5% increase in patient satisfaction scores.

- Strengthened Compliance Posture
  - Through routine security audits and bias checks, the hospital avoided regulatory penalties and maintained confidence among stakeholders.

- Sustainable Innovation Mindset
  - Having proven success with Responsible AI, Riverview Healthcare now plans to apply ethical AI in other areas (like resource scheduling and telehealth triage) without compromising compliance or patient safety.

This use case highlights how responsible AI acts as a guardrail (ensuring ethical, compliant deployments) and a catalyst for innovation (unlocking predictive capabilities).

By embedding principles like fairness, transparency, accountability, privacy, and continuous improvement from the outset, organisations can drive measurable outcomes while preserving brand reputation and stakeholder trust.

Enhancing Brand Reputation and Stakeholder Trust

Customers, investors, and regulators are paying closer attention to ethical AI adoption. Businesses that prioritise fairness, security, and compliance are more likely to maintain trust and avoid reputational damage.

Studies have shown that organisations with strong AI governance outperform competitors, as ethical AI builds credibility and fosters long-term stakeholder confidence.

Long-Term Cost Savings

Failing to implement AI responsibly can have significant financial consequences. Bias in AI decision-making can lead to lawsuits, regulatory penalties and public backlash.

Data breaches caused by weak governance can result in millions in legal costs and lost business. Investing in Responsible AI from the outset—through governance frameworks, security protocols, and ethical oversight—is far more cost-effective than dealing with the fallout of a poorly managed system.

“An AI model is only as good as the data used to train it and, if the data isn’t up to scratch, the system could end up hallucinating or generating outputs based on biased data. No one wants to fall behind the competition – that goes without saying – but rushing to implement AI solutions could damage your brand beyond repair if you get it wrong, not to mention cost you a significant amount of money.” Josh Barnard – Aztech IT’s Interim Head of Marketing

Practical Steps to Implement Responsible AI Principles

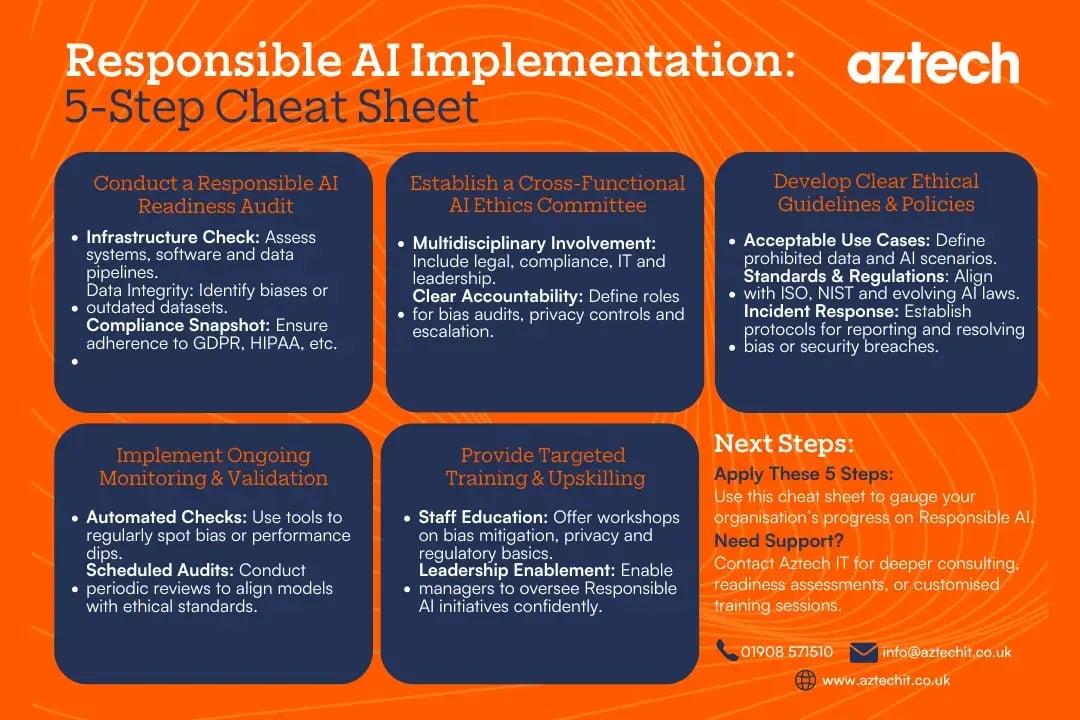

Download the PDF version of the Responsible AI Implementation Cheat Sheet.


Here’s a step-by-step approach to implementing responsible AI principles into your organisation.

Step 1: Conduct a Comprehensive AI Readiness Audit

Begin by checking your current systems and processes. This means looking at your IT infrastructure, the quality of your data, and how your organisation works overall. It is important to spot any weaknesses, especially in security and compliance, before you start using AI.

Assess current infrastructure, data quality, and organisational processes

- Review IT infrastructure to determine if it can support AI workloads effectively. Consider cloud capabilities, computational power, and data storage requirements.

- Analyse data quality, ensuring it is complete, unbiased, and representative. Poor data leads to unreliable AI solutions.

- Map out current organisational processes to understand how AI will integrate into workflows and decision-making structures.
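Checking whether data is representative can start with something as simple as comparing group shares in the dataset against a reference population. The sketch below assumes you already have reference shares (for example, from census or customer-base figures). The group labels, shares and the 5-percentage-point tolerance are illustrative assumptions.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the data falls short of the reference.

    `groups` is a list of group labels, one per record.
    `reference_shares` maps each group to its expected share (0 to 1).
    The result maps each under-represented group to (observed, expected).
    """
    counts = Counter(groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Hypothetical training set and reference population
training_groups = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(training_groups, reference)
print(gaps)
```

Any group that appears in the result is a candidate for additional data collection or re-sampling before a model is trained on the dataset.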

Identify gaps in security and compliance

- Conduct a cyber security assessment to check if data and AI models are protected against threats such as data breaches or adversarial attacks.

- Review regulatory compliance (e.g., GDPR, HIPAA, ISO 27001) to ensure AI implementations align with data protection laws.

- Identify areas where AI might introduce risks, such as automation bias or ethical concerns, and develop mitigation strategies.

Step 2: Form Cross-Functional AI Ethics Committees

Create a team that includes people from different parts of the organisation—legal, compliance, IT and leadership. This diverse team will work together to make sure that all AI decisions are ethical and responsible. Assign clear roles so that everyone knows who is in charge.

Involve legal, compliance, IT, and leadership stakeholders

- Legal teams help interpret and apply AI regulations and ensure that AI use aligns with existing laws.

- Compliance officers monitor ethical risks and ensure AI systems meet industry standards.

- IT professionals provide technical insights, ensuring AI models are robust, fair, and secure.

- Leadership sets the vision for AI adoption and ensures AI aligns with business objectives.

Assign accountability for oversight and decision-making

- Clearly define who is responsible for AI governance within the organisation.

- Establish reporting structures to escalate ethical concerns or AI-related risks.

- Assign specific roles for reviewing AI decisions, addressing biases, and ensuring transparency.

Step 3: Develop Clear Ethical Guidelines and Responsible AI Policies

Write down clear rules for using AI. These guidelines should explain what AI can and cannot be used for, how to handle data safely, and what to do if there is a problem. It is a good idea to follow recognised industry standards like ISO and NIST frameworks.

- Outline what AI can and cannot be used for within the organisation. For example, ensure AI is not used for discriminatory decision-making or invasive surveillance.

- Establish data-handling policies, ensuring transparency in how data is collected, processed, and stored.

- Define clear escalation pathways for when AI solutions produce biased, inaccurate, or harmful outcomes.

Align with industry standards and regulations (e.g., ISO, NIST frameworks)

- Follow established frameworks like ISO/IEC 38507 (Governance of AI) and NIST’s AI Risk Management Framework to guide responsible AI practices.

- Ensure AI systems comply with laws such as GDPR (General Data Protection Regulation) and sector-specific rules.

- Continuously update policies to reflect evolving AI regulations and ethical challenges.

Step 4: Implement Ongoing Monitoring and Validation

Once your AI systems are running, you need to keep a close eye on them. Set up automated tests to spot any biases or declines in performance. Regular checks will help you make sure that the AI continues to meet ethical standards.

Set up automated tests to detect bias or performance degradation

- Implement bias detection tools to analyse AI outputs for unintended discrimination. For example, test AI-driven recruitment tools to ensure fairness across gender and ethnic groups.

- Deploy performance monitoring systems to track AI efficiency and flag anomalies.

- Use explainable AI (XAI) techniques to make AI decisions transparent and auditable.
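An automated performance check can be as simple as comparing recent accuracy against the level measured when the model was approved. The following is a minimal sketch under that assumption. The baseline figure, the sample predictions and the 5-point `max_drop` tolerance are hypothetical, and a production system would typically track several metrics over time rather than a single number.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    if not labels:
        raise ValueError("no labelled examples to evaluate")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_degradation(baseline_accuracy, recent_predictions, recent_labels, max_drop=0.05):
    """Compare recent accuracy with the baseline and raise an alert on a large drop."""
    recent = accuracy(recent_predictions, recent_labels)
    drop = baseline_accuracy - recent
    return {"recent_accuracy": recent, "drop": drop, "alert": drop > max_drop}


# Hypothetical batch: 20 recent cases, 16 predicted correctly
recent_labels = [1] * 10 + [0] * 10
recent_predictions = [1] * 8 + [0] * 2 + [0] * 8 + [1] * 2

report = check_degradation(0.90, recent_predictions, recent_labels)
print(report)
```

Running a check like this on a schedule, and routing alerts to the team that owns the model, turns "ongoing monitoring" from a policy statement into a routine task.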

Schedule periodic reviews to ensure AI outputs align with ethical standards

- Conduct regular audits to assess AI’s compliance with organisational policies and regulatory requirements.

- Perform ethical impact assessments before launching major AI-driven initiatives.

- Engage external reviewers to provide an unbiased assessment of AI risks.

Step 5: Provide Targeted Training and Upskilling

Train your staff in responsible AI practices. Make sure they understand data privacy, ethical guidelines, and the basics of how AI works. This training will give IT managers the confidence to lead AI projects without always needing outside help.

Train staff on responsible AI principles, data privacy and general AI literacy

- Provide workshops on AI ethics, bias mitigation, and compliance best practices.

- Educate employees on data privacy regulations and their impact on AI development and deployment.

- Offer AI literacy programs to help staff understand how AI models work and how they influence decision-making.

Enable IT Managers to lead with confidence and reduce reliance on external consultants

- Equip IT leaders with the skills to oversee AI implementations independently.

- Train AI teams to troubleshoot AI solutions, identify biases, and manage compliance requirements.

- Reduce dependency on third-party AI consultants by building in-house expertise.

Common Challenges in Implementing Responsible AI

According to a survey by IDC on Microsoft and Responsible AI, more than 30% of respondents say that the biggest obstacle to using and expanding AI is the lack of governance and risk management solutions.

Here are some of the common challenges in implementing responsible AI for businesses:

1. Budget Concerns

AI isn’t cheap. Developing, testing, and maintaining AI systems require significant investment. Companies need to spend on high-quality data, skilled professionals, and computing power.

Smaller businesses, in particular, may struggle to justify these costs when the return on investment isn’t immediately clear. RAI also demands additional spending on ethical audits, AI bias detection, and compliance – all of which add to the financial burden.

2. Time and Resource Constraints

AI implementation isn’t a quick fix. Training AI models, ensuring they are fair and unbiased, and integrating them with existing systems take time.

Skilled AI professionals and data scientists are in high demand, and businesses often lack the in-house expertise to manage AI projects effectively. This means companies must either invest in upskilling their teams or rely on external experts, both of which require time and resources that many businesses can’t spare.

3. Fear of Disruption

Many organisations hesitate to adopt AI because they fear it will disrupt their current workflows. Employees may worry about job security, while leaders may be concerned about potential errors AI could introduce.

Implementing AI without a clear strategy can cause confusion, inefficiencies, or even legal issues. Companies need a structured approach to change management, ensuring that AI complements human roles rather than replacing them.

4. Scepticism about AI’s Value

Not everyone is convinced that AI is worth the hype. Some business leaders see it as a costly experiment with no guaranteed success. They may be wary of investing in AI if they don’t fully understand how it works or how it will benefit their organisation.

There’s also the issue of trust—if AI systems make decisions in a way that isn’t transparent, it’s harder for people to accept them. Overcoming scepticism requires clear communication, real-world case studies, and demonstrating tangible benefits over time.

The Future of Responsible AI: Productivity, Cyber Security, and Compliance

As companies look to AI to accelerate decision-making and streamline operations, they must balance these gains with security, compliance, and ethical considerations.

Responsible AI provides the foundation for achieving this balance—ensuring innovation does not come at the expense of cyber security, regulatory alignment, or trust.

Balancing Productivity and Security

Organisations are under pressure to improve efficiency, reduce costs and deliver faster results. AI is a key driver of this transformation, automating repetitive tasks, enhancing decision-making and supporting IT teams that are stretched thin. Predictive analytics, machine learning models and AI-driven automation can cut down on manual workloads, freeing employees to focus on higher-value work.

However, adopting AI without the right safeguards can create unintended security risks. AI-powered systems rely on vast amounts of data, and, without governance, they can become an entry point for cyber threats or introduce operational weaknesses.

Responsible AI helps businesses harness automation safely—by embedding data privacy and security into AI design, maintaining transparency in decision-making and ensuring models are trained on reliable, unbiased data.

Cyber Security Considerations in AI Adoption

AI can strengthen cyber security by detecting and responding to threats faster than human analysts. Machine learning models can identify unusual patterns, flagging potential breaches before they escalate. Predictive analytics helps to anticipate vulnerabilities, enabling IT teams to act proactively rather than reactively.

At the same time, AI itself can become a security risk if not properly managed. AI-driven automation can expand an organisation’s attack surface, introducing new vulnerabilities that cyber criminals may exploit.

Poorly secured AI solutions can be manipulated through adversarial attacks, where threat actors subtly alter inputs to deceive the system. Responsible AI governance promotes cyber security as a priority—defining strict access controls, regularly auditing AI models and implementing protocols to detect and mitigate adversarial threats.

According to research conducted by Microsoft, 87% of UK organisations are vulnerable to cyberattacks in the age of AI.

As per the UK government, 47% of organisations that use AI do not have any specific AI cyber security practices or processes in place.

Evolving Regulatory Environment and Compliance Imperatives

AI regulation is evolving rapidly, and businesses must prepare for stricter oversight. The EU AI Act has introduced new requirements for AI transparency, bias mitigation and risk management, categorising AI applications by risk level and imposing compliance obligations accordingly.

Meanwhile, several U.S. states are moving forward with AI-specific legislation and global regulatory bodies are exploring similar measures. Businesses operating in multiple regions will need flexible compliance strategies to keep up with these developments.

Uncertainty remains around how AI regulations will take shape, but waiting until new laws are finalised is not a viable strategy. Organisations that proactively implement Responsible AI frameworks, embedding transparency, accountability and security, will be in a stronger position to adapt when new rules come into effect, as any new regulations are likely to centre on these principles.

Industry-Specific Compliance Challenges

Compliance requirements vary across industries, with financial services and healthcare being more heavily regulated than others. Financial institutions using AI for risk assessment and fraud detection must navigate strict protocols around data privacy and algorithmic decision-making.

In healthcare, AI-driven diagnostics and patient management tools must comply with GDPR and HIPAA, and should have a strong focus on bias mitigation. In one recent case, an AI algorithm “…used health costs as a proxy for health needs and falsely concluded that Black patients are healthier than equally sick white patients, as less money was spent on them.”

This highlights why Responsible AI is critical—without careful oversight, AI can reinforce existing disparities rather than improve outcomes. Bias mitigation must be a core requirement, not an afterthought, ensuring that AI-driven decisions are fair, accurate, and aligned with ethical medical practices.

For businesses operating in these sectors, a Responsible AI framework goes beyond futureproofing against new laws; it helps to build trust in an increasingly untrusting world and will protect your company from legal risks and reputational damage.

Approximately 71% of mid-tier managing partners anticipate their firms will invest in artificial intelligence (AI) over the next three years as products evolve and become more accessible, according to a recent ICAEW report.

AI Governance as a Competitive Differentiator

AI governance is often seen as a compliance measure, but forward-thinking organisations recognise it as a competitive advantage. Establishing clear policies around AI development, deployment and monitoring reduces regulatory risks and builds trust with customers, investors and partners.

Well-governed AI systems provide greater reliability, reduce operational risks and demonstrate a commitment to ethical innovation.

Why Governance Sets You Apart

Businesses that proactively manage AI governance are better positioned to demonstrate the reliability of their AI-driven processes. Consumers and enterprise buyers are increasingly wary of AI decision-making, particularly in areas like hiring, lending and healthcare. By embedding explainability and fairness into AI solutions, companies can differentiate themselves as responsible and trustworthy.

AI governance also plays a key role in productivity gains. A well-governed AI system is easier to maintain, less prone to errors and more resilient to external forces. Boards and executive teams are more likely to approve AI investments when they can see a clear, structured approach to risk management and compliance.

Recent data from the UK government shows that most businesses in the UK are using AI governance frameworks. Specifically, 84% of companies have a person in charge of their AI practices. However, while many leaders say they are accountable for how AI is used, the responsibility is often shared among different people or groups within the company.

Staying Agile Amid Rapid Changes

It’s not hard to recognise that the current AI landscape is like the shifting sands of a desert. Organisations that can adapt quickly will gain a competitive edge. As regulations, customer expectations and AI capabilities continue to evolve, companies that have established strong governance policies will respond faster to these changes, avoiding costly disruptions.

For industries where compliance is a major factor—such as financial services and healthcare—this agility is particularly valuable. Businesses that wait until new regulations are enforced will struggle to retrofit governance into existing AI systems, while those that have invested in RAI from the outset will be well-positioned to scale their AI initiatives confidently.

Frequently Asked Questions (FAQs)

1. What is responsible AI governance?

Responsible AI governance refers to the framework and practices that organisations implement to ensure AI technologies are developed and deployed in an ethical, fair, and transparent manner. It involves setting up guidelines, policies, and structures to monitor AI systems throughout their lifecycle, ensuring accountability, compliance with legal and ethical standards, and addressing potential biases. Governance also includes creating oversight mechanisms to manage risks associated with AI, such as unintended consequences or misuse of technology.

2. What is a Responsible AI standard?

A responsible AI standard is a set of agreed-upon principles, practices, and regulations that guide the development and use of AI technologies in a manner that is ethical, transparent, and beneficial to society. These standards for building AI systems focus on fairness, transparency, accountability, privacy, and inclusivity. The aim is to ensure that AI systems are aligned with human values and public interests while minimising harm. In the UK, organisations may refer to guidelines from institutions like the Centre for Data Ethics and Innovation (CDEI), ISO/IEC 23894 for AI risk management or ISO/IEC 42001 for AI Management Systems (AIMS).

3. What is the difference between responsible AI, trustworthy AI and ethical AI?

Responsible AI refers to AI that is developed and deployed in a way that prioritises fairness, accountability, transparency, and the minimisation of harm. It involves ensuring that AI systems are used to benefit societal values, comply with regulations, and reduce negative impacts.

Trustworthy AI is similar but specifically focuses on the reliability and dependability of AI systems, ensuring they perform as intended and in a secure, safe manner. Trustworthy AI builds confidence among users by adhering to established ethical frameworks and guidelines and offering assurances about the system’s capabilities and limitations.

Ethical AI addresses the moral implications of AI technology, ensuring that AI decisions are aligned with ethical principles such as model fairness, justice, and respect for human rights. Ethical AI considers how AI impacts individuals and society, aiming to avoid harm and ensure just outcomes.

4. What is something responsible AI can help mitigate?

Responsible AI can help mitigate several issues related to the use of AI systems, including:

- Bias and Discrimination: By ensuring that AI solutions are developed using diverse and representative data, responsible AI practices help prevent biased outcomes that may unfairly disadvantage certain groups.

- Privacy Violations: Responsible AI frameworks ensure that AI systems comply with data protection regulations (e.g., GDPR) and respect users' privacy by safeguarding personal data and ensuring transparency in data collection and usage.

- Unintended Consequences: Responsible AI can reduce the risk of AI systems making harmful or unintended decisions, such as automated systems that unintentionally reinforce harmful stereotypes or contribute to inequality.
